The blkback/blkfront drivers developed by the original Xen team implement a lightweight, fast, zero-copy protocol that has served well for many years. However, as the number of physical cores and the number of guests on a system have increased, we have begun to identify some bottlenecks to scalability. This prompted a recent improvement to the protocol, called “persistent grants”. This blog post describes the original protocol and the source of the bottlenecks, then describes persistent grants, along with experiments demonstrating the scalability improvements.

How the PV driver protocol works

Xen PV drivers for block devices use a special protocol to transfer data from the guest to the devices that act as storage. This protocol is based on a shared ring that allows the exchange of data between the guest and the host. This ring is shared between the guest and the driver domain, which is usually Dom0. The driver domain has access to the physical hardware (a disk) or to the virtual image of the guest, and the guest issues requests to perform operations on this storage.

The capacity of the shared ring is limited, as is the maximum number of requests in flight at any given time. To avoid allocating a very large amount of shared memory up front, the Xen shared ring initially allocates only the minimum amount of memory needed to queue the requests and responses (a single memory page), but not the data itself. The data is transferred using grants, which are memory areas shared on request. References to those grants are passed along with the request, so the driver domain can map those areas of memory and perform the requested operations.

To give a clear example, let’s walk through a typical request-response cycle. The cycle is always initiated by the guest, which requests several grants (as many as needed to fulfill the request). If the request is a write operation, these grants are filled with the data to write to the disk. The necessary permissions are then given to the driver domain so it can map the grants (read-only if the request is a write operation, or with write permissions if the request is a read operation). Once the grants are set up, their references (the grant references) are added to the request, the request is queued on the shared ring, and the driver domain is notified that it has a pending request.

When the driver domain reads the request, it parses the grant references in the message and maps the grants into the driver domain’s memory space. Once that is done, the driver domain can access this memory as if it were local memory. The request is then fulfilled by the driver domain, and data is read from or written to the grants. After the operation has completed, the grants are unmapped, a response is written to the shared ring, and the guest is notified.

When the guest sees that it has a pending response, it reads it and revokes the permissions to share the related grants. After that, the operation is complete.
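The cycle described above can be sketched as a small simulation. This is only an illustrative model, not the real blkfront/blkback code: the class names, the `do_io` helper, the dictionary standing in for the grant table and the deques standing in for the shared ring are all assumptions made for the example.

```python
from collections import deque

PAGE_SIZE = 4096  # illustrative page size


class Guest:
    """Frontend side: owns the (hypothetical) grant table."""

    def __init__(self):
        self.grant_table = {}  # grant ref -> {"page": bytearray, "readonly": bool}
        self.next_ref = 0

    def grant_page(self, page, readonly):
        # Share one page with the driver domain and hand back its reference.
        ref = self.next_ref
        self.next_ref += 1
        self.grant_table[ref] = {"page": page, "readonly": readonly}
        return ref

    def end_access(self, ref):
        # Revoke the permission once the response has been consumed.
        del self.grant_table[ref]


class DriverDomain:
    """Backend side: maps grants, touches the disk, unmaps grants."""

    def __init__(self, guest, disk):
        self.guest = guest
        self.disk = disk  # sector number -> bytes
        self.maps = 0
        self.unmaps = 0

    def handle(self, request):
        op, sector, refs = request
        for i, ref in enumerate(refs):
            entry = self.guest.grant_table[ref]  # map the grant
            self.maps += 1
            if op == "write":
                self.disk[sector + i] = bytes(entry["page"])
            else:
                if entry["readonly"]:
                    raise PermissionError("grant is read-only")
                entry["page"][:] = self.disk[sector + i]
            self.unmaps += 1  # unmap; in the real protocol this needs a TLB flush
        return ("ok", refs)


def do_io(guest, backend, op, sector, pages):
    requests, responses = deque(), deque()  # stand-in for the shared ring
    # Guest: grant the pages (read-only for a write, writable for a read).
    refs = [guest.grant_page(p, readonly=(op == "write")) for p in pages]
    requests.append((op, sector, refs))
    # Driver domain: consume the request and queue a response.
    responses.append(backend.handle(requests.popleft()))
    # Guest: consume the response and revoke access to the grants.
    status, done_refs = responses.popleft()
    for ref in done_refs:
        guest.end_access(ref)
    return status
```

Writing a page and reading it back through `do_io` costs one map and one unmap per page per request, which is the overhead the next section is concerned with.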

Scalability and persistent grants

As we can see from the above flow, there is no memory copy, but each request requires the driver domain to perform several mapping and unmapping operations, and each unmapping requires a TLB flush. TLB flushes are expensive operations, and the time required to perform a TLB flush increases with the number of CPUs. Additionally, granting a page from one domain to another requires grabbing the grant lock for each domain. As the number of guests using the same driver domain increases, the contention on this lock becomes significant.

To solve this problem, an extension to the block ring protocol has been added, called “persistent grants”. Persistent grants consist of reusing the same grants for all the block-related transactions between the guest and the driver domain, so there’s no need to unmap the grants on the driver domain, and TLB flushes are not performed (unless the device is disconnected and all mappings are removed). Furthermore, since grants are set up only once, there is no need to grab the driver domain’s grant lock on every transaction.

This, of course, doesn’t come for free: since grants are no longer mapped and unmapped, data has to be copied to or from the persistently mapped grant. But for large numbers of guests, the overhead from TLB flushes and lock contention greatly outweighs the overhead of copying.
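To make the trade-off concrete, here is a minimal sketch that simply counts operations for a stream of identical requests. The function name and the idea of identifying a grant by its slot number are assumptions for illustration; it says nothing about how long each operation takes.

```python
def count_operations(num_requests, pages_per_request, persistent):
    """Count map, unmap and copy operations for a stream of identical requests."""
    maps = unmaps = copies = 0
    mapped = set()  # grants the backend currently keeps mapped

    for _ in range(num_requests):
        for slot in range(pages_per_request):
            if persistent:
                # The same grants are reused on every request,
                # so each one is mapped only the first time it is seen.
                if slot not in mapped:
                    mapped.add(slot)
                    maps += 1
                copies += 1  # data is copied to or from the persistent page
            else:
                maps += 1    # a fresh grant is mapped for this request
                unmaps += 1  # and unmapped afterwards (TLB flush)
    return {"maps": maps, "unmaps": unmaps, "copies": copies}
```

With an arbitrary workload of 1000 requests of 4 pages each, the non-persistent path performs 4000 maps and 4000 unmaps and no copies, while the persistent path performs 4 maps, no unmaps and 4000 copies.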

There are implementations of persistent grants for Linux [1] and NetBSD [2]. Both implementations are very similar, since the code of both operating systems is similar when it comes to the Xen drivers and backends.

The main difference in the frontend driver (the guest driver) is that each time a new grant is needed we request it, and when it is no longer in use we don’t free it, but instead add it to a list of persistent grants. At some point, we no longer need to request new grants, since the list of free persistent grants is big enough to serve the requests. This list is stored as a singly linked list, to keep memory overhead to a minimum.
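The frontend behaviour described above can be sketched as a small pool backed by a singly linked free list. The names `PersistentGrant`, `GrantPool`, `get` and `put` are hypothetical and are not taken from the Linux or NetBSD drivers.

```python
class PersistentGrant:
    """One granted page, chained into a singly linked free list."""

    def __init__(self, ref):
        self.ref = ref
        self.next = None


class GrantPool:
    def __init__(self):
        self.free_head = None      # head of the singly linked free list
        self.next_ref = 0
        self.grants_requested = 0  # how many new grants we had to request

    def get(self):
        """Reuse a free persistent grant, or request a new one if none is free."""
        if self.free_head is not None:
            grant = self.free_head
            self.free_head = grant.next
            grant.next = None
            return grant
        grant = PersistentGrant(self.next_ref)
        self.next_ref += 1
        self.grants_requested += 1
        return grant

    def put(self, grant):
        """Return a grant to the free list instead of revoking it."""
        grant.next = self.free_head
        self.free_head = grant
```

After the first request has populated the list, later requests of the same size are served entirely from it, so `grants_requested` stops growing.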

The backend driver does something similar to the frontend: it also keeps a list of mapped grants, and it doesn’t unmap them when the operation is finished. The main difference is that the backend receives references to the grants it has to use, and needs to find where those grants are mapped. To speed up this critical path, mapped grant references and their corresponding memory addresses are stored in a red-black tree, which has a worst-case search time of O(log n). Additions are more expensive than when using a plain binary tree or a linked list, but additions to the tree are not frequent, especially once we reach a stable state.
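The lookup on the backend can be sketched as follows. Python’s standard library has no red-black tree, so this example swaps in a sorted array with binary search: lookups are still O(log n), while insertions are O(n), which mirrors the "cheap lookups, rarer and more expensive additions" trade-off but is not the data structure the drivers use. The class and method names are assumptions.

```python
import bisect


class MappedGrants:
    """Maps grant references to the addresses where they are mapped."""

    def __init__(self):
        self._refs = []   # grant references, kept sorted
        self._addrs = []  # mapped address for the reference at the same index

    def insert(self, ref, addr):
        # Rare path: only taken the first time a grant is seen.
        i = bisect.bisect_left(self._refs, ref)
        if i < len(self._refs) and self._refs[i] == ref:
            raise KeyError(f"grant {ref} is already mapped")
        self._refs.insert(i, ref)
        self._addrs.insert(i, addr)

    def lookup(self, ref):
        # Hot path: binary search, O(log n) comparisons.
        i = bisect.bisect_left(self._refs, ref)
        if i < len(self._refs) and self._refs[i] == ref:
            return self._addrs[i]
        return None  # not mapped yet; the caller would map and insert it
```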

Performance improvement

To measure the performance improvement of this implementation, a RAM block disk was used for the tests. The RAM disk has a size of 1G for each guest; each test was run 3 times and the results were averaged.

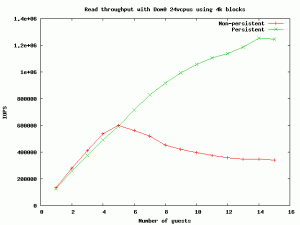

Inside each guest, the fio benchmark was used to run the tests. The first graph corresponds to a read test, using a block size of 4k.

When running more than 6 concurrent guests, there’s a notable speed improvement with the persistent grants implementation, and at 15 guests we are able to perform about 1.24 million IOPS (compared to the previous 340,000 IOPS). As we can see, using persistent grants allows the number of IOPS to scale with the number of guests, and the backend is no longer limited by the expensive TLB flush operation. To give a bit more detail, here is the output of ministat when running 15 concurrent guests, for both a persistent and a non-persistent frontend:

    x non-persistent
    + persistent
    +------------------------------------------------------------------------------------------------+
    |x + + +|
    |A |__M_A____| |
    +------------------------------------------------------------------------------------------------+
        N           Min           Max        Median           Avg        Stddev
    x   3        338801        342392        340810     340667.67     1799.7262
    +   3       1206907       1306686       1223131     1245574.7     53542.047
    Difference at 95.0% confidence
        904907 +/- 85861.6
        265.627% +/- 25.2039%
        (Student's t, pooled s = 37881.3)
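For readers who want to check the arithmetic behind the "Difference at 95.0% confidence" lines, here is a minimal sketch that recomputes them from the summary rows above using a pooled-variance Student's t interval. The function name is hypothetical, and the critical value 2.776 (two-sided 95%, 4 degrees of freedom) is hard-coded as an assumption rather than looked up from a library.

```python
import math


def pooled_difference(n1, mean1, sd1, n2, mean2, sd2, t_crit):
    """Difference of means with a pooled-variance Student's t interval."""
    diff = mean2 - mean1
    dof = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / dof
    pooled_s = math.sqrt(pooled_var)
    half_width = t_crit * pooled_s * math.sqrt(1 / n1 + 1 / n2)
    return diff, half_width, pooled_s


# Two-sided 95% critical value of Student's t for 3 + 3 - 2 = 4 degrees of freedom.
T_CRIT_95_DOF4 = 2.776

# Summary rows from the ministat output: N, Avg, Stddev.
NON_PERSISTENT = (3, 340667.67, 1799.7262)
PERSISTENT = (3, 1245574.7, 53542.047)

diff, half_width, pooled_s = pooled_difference(
    *NON_PERSISTENT, *PERSISTENT, T_CRIT_95_DOF4
)
percent = 100 * diff / NON_PERSISTENT[1]
percent_half_width = 100 * half_width / NON_PERSISTENT[1]
```

The resulting `diff`, `half_width`, `pooled_s` and `percent` should agree with the figures ministat reports, up to rounding.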

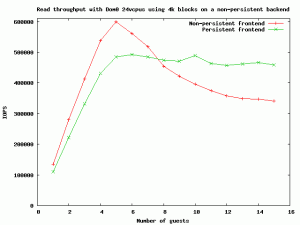

It should be noted that persistent grants can be used in the frontend driver even if the backend driver doesn’t support them. In this case, the backend still performs unnecessary TLB flushes, but lock contention is still reduced, since the frontend is not repeatedly granting pages to the driver domain. The second graph shows the performance of a traditional (non-persistent) frontend and a persistent frontend with a non-persistent backend.

Even if the backend doesn’t support persistent grants, there’s a small speed improvement when using a large number of guests, and the overall performance is much more consistent when using a persistent frontend.

The green line in the above graph shows what will happen, for example, when running several guests with a 3.8 kernel (provided that the persistent grant patches finally make it into 3.8) on a driver domain running a 3.4 kernel.

Summary

To summarize what has been said in this article, there are several key points of this new implementation:

- The non-persistent implementation doesn’t require memcpy, but it does require grant operations, which take locks in the hypervisor and cause TLB flushes.

- The persistent implementation requires memcpy, but no grant operations.

The main difference in computational cost between grant operations and memcpy is that memcpy takes constant time, regardless of the number of guests running concurrently on the same system, while the cost of grant operations is proportional to the number of guests running on the same host, so as the number of guests increases, so does the cost of grant operations. As we can see from the graph above, the break-even point at which memcpy becomes faster than grant operations is between 6 and 7 guests (when running on non-persistent backends).

[1] The initial implementation of persistent grants for Linux was done by Oliver Chick; later development was done by Roger Pau Monné.

[2] The implementation for the frontend driver was done by Roger Pau Monné.