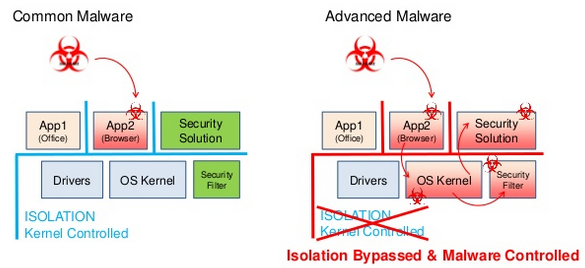

The security threats we’re facing today are becoming increasingly sophisticated. Rootkits and malware that exploit kernel and 0-day vulnerabilities pose especially serious challenges for classic anti-malware solutions, because those solutions lack isolation: they typically execute in the same context as the malware they’re trying to prevent.

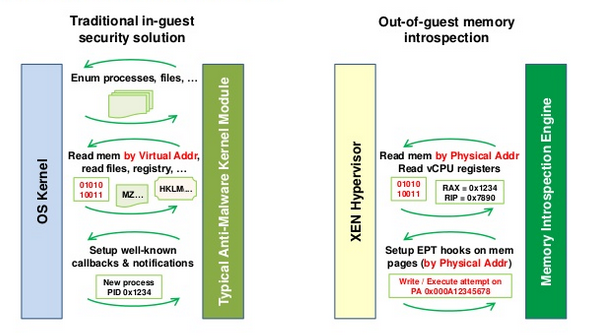

With Xen guest memory introspection, however, the anti-malware application can live outside the monitored guest, without relying on functionality that advanced malware can render unreliable (no agent or any other type of special software needs to run inside the guest). It does this by analyzing the raw memory of the guest OS and identifying interesting kernel memory areas (driver objects and code, IDT, etc.) and user space memory areas (process code, stack, heap). This is made possible by existing hardware virtualization extensions (Intel EPT / AMD RVI). We hook the guest OS memory by marking interesting 4K pages as non-execute or non-write, then audit accesses to those pages from code running in a separate domain.
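To make the page-hooking idea concrete, here is a minimal toy model in Python. It is only a sketch: it simulates per-page restrictions with a dictionary instead of EPT/RVI, and the names `HookedMemory`, `hook` and `access` are hypothetical, not part of Xen or libbdvmi.

```python
from dataclasses import dataclass, field

PAGE_SIZE = 4096  # 4K pages, as in the text


@dataclass
class HookedMemory:
    """Toy stand-in for guest memory with per-page access restrictions."""

    # page number -> set of denied access kinds ("w" and/or "x")
    denied: dict = field(default_factory=dict)
    # audit log of (kind, address) pairs that hit a hooked page
    events: list = field(default_factory=list)

    def hook(self, address: int, deny: str) -> None:
        """Mark the page containing `address` as non-write and/or non-execute."""
        self.denied.setdefault(address // PAGE_SIZE, set()).update(deny)

    def access(self, address: int, kind: str) -> bool:
        """Return True if the access goes through, False if it was trapped."""
        if kind in self.denied.get(address // PAGE_SIZE, set()):
            # In a real setup this is where the introspection logic,
            # running in a separate domain, would be notified.
            self.events.append((kind, address))
            return False
        return True


mem = HookedMemory()
mem.hook(0x1000, "w")      # make the page at 0x1000 non-write
mem.access(0x1008, "r")    # reads are not restricted here
mem.access(0x1FF8, "w")    # same page, write: trapped and logged
mem.access(0x2000, "w")    # next page is not hooked
```

The point of the sketch is the granularity: a hook covers a whole 4K page, so every write or execute attempt anywhere in that page generates an event that has to be audited.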

Using hooks, we can detect that a driver (or kernel module) has been loaded or unloaded, that a new user process has been created, when user stack or heap is being allocated, and when memory is being paged in or out.

Using these techniques, we can protect against known rootkit hooking techniques, and protect specific user processes against code injection, function detouring, code executing from the stack or heap areas, and unpacked malicious code. We can also inject remediation tools into the guest on-the-fly (with no help needed from within the guest).

In our quest for zero-footprint guest introspection using Xen, we needed to be able to decide whether changes to the guest OS are benign or malicious, and, once decided, to control whether they are allowed to happen, or rejected.

To that end, we needed a very low-overhead way to be notified of interesting Xen guest events, one that we could use in dom0 (or a similarly privileged dedicated domain) and that would connect our introspection logic component to the guest.

We’re very happy to announce that the library we’ve created to help us perform virtual machine introspection, libbdvmi, is now available on GitHub under the LGPLv3 license. The library is x86-specific, and while there’s some #ifdeferry suggesting that the earliest supported version is Xen 4.3, only Xen 4.6 will work out-of-the-box with it. It has been written from scratch, using libxenctrl and libxenstore directly.

Xen 4.6 is an important milestone, as it has all the features needed to build a basic guest memory introspection application. Xen 4.5 already included our contributions for emulating instructions in response to mem_access events and for suppressing suspicious writes. With the kind help of the Xen developer community, 4.6 adds support for XCR write vm_events, guest-requested custom vm_events, memory content hiding when instructed to emulate an instruction via a mem_access event response, and denying suspicious CR and MSR writes in response to a vm_event.
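As an illustration of what denying a suspicious CR write could look like on the decision-making side, here is a small Python sketch. The policy itself is an assumption made up for this example (refuse to let the guest clear CR0.WP or CR4.SMEP), and `allow_cr_write` is a hypothetical helper, not a Xen or libbdvmi function. Only the bit positions are architectural.

```python
CR0_WP = 1 << 16    # CR0 write-protect bit
CR4_SMEP = 1 << 20  # CR4 supervisor-mode execution prevention bit

# Bits this hypothetical policy refuses to let the guest clear.
PROTECTED_BITS = {"cr0": CR0_WP, "cr4": CR4_SMEP}


def allow_cr_write(register: str, old: int, new: int) -> bool:
    """Return False (deny) if the write clears a protected bit that was set."""
    protected = PROTECTED_BITS.get(register, 0)
    cleared = old & ~new  # bits that were 1 and would become 0
    return (cleared & protected) == 0


# A write that clears CR0.WP is rejected by this policy...
allow_cr_write("cr0", 0x80050033, 0x80040033)
# ...while a write that leaves WP alone is allowed.
allow_cr_write("cr0", 0x80050033, 0x8005003B)
```

Because the event carries both the old and the new value, a check like this needs no extra round trip to the guest before answering.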

While LibVMI is great, and has been considered for the task, it has slightly different design goals:

- in order to work properly, LibVMI requires that you install a configuration file into either $HOME/etc/libvmi.conf or /etc/libvmi.conf, with a set of entries for each virtual machine or memory image that LibVMI will access (while it is possible to use it without extracting this detailed information, doing so severely limits available guest information, and it does reflect a core design concern);

- it uses GLib for caching (which gets indirectly linked with client applications, and so needs to be distributed with the application, or otherwise be available on the machine where the application will be deployed);

- it doesn’t publicly offer a way of mapping guest pages from userspace (so that we could write to them directly). For those technically inclined, something along the lines of XenDriver::mapVirtMemToHost();

- it offers no way to ask for a page fault injection in the guest.

Libbdvmi aims to provide a very efficient way of working with Xen to access guest information in an OS-agnostic manner:

- it only connects an introspection logic component to the guest, leaving on-the-fly OS detection and decision-making to it;

- it provides a xenstore-based way to know when a guest has been started or stopped;

- it has as few external library dependencies as possible: where LibVMI has used GLib for caching, we’ve only used STL containers, and the only other dependencies are libxenctrl and libxenstore;

- it allows mapping guest pages from userspace, with the caveat that this implies mapping a single page for writing, where LibVMI’s buffer-based writes would possibly touch several non-contiguous pages;

- it works as fast as possible: we get a lot of events, so any unnecessary userspace / hypervisor context switches may incur unacceptable penalties (this is why one of our first patches had vm_events carry interesting register values instead of querying the guest from userspace after receiving the event);

- last but not least, since the Xen vm_event code has been in quite a bit of flux, being able to immediately modify the library code to suit a new need, or to update the code for a new Xen version, has been a bonus to the project’s development pace.
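The single-page caveat mentioned in the list is easier to see with a little arithmetic. The Python sketch below splits a buffer write into per-page pieces. `split_by_page` is a hypothetical helper written for this post's illustration, not a function of LibVMI or libbdvmi, and it assumes 4K pages.

```python
PAGE_SIZE = 4096  # 4K pages


def split_by_page(address: int, length: int) -> list:
    """Split [address, address + length) into (page, offset, size) pieces."""
    chunks = []
    while length > 0:
        offset = address % PAGE_SIZE
        # Never run past the end of the current page.
        size = min(length, PAGE_SIZE - offset)
        chunks.append((address // PAGE_SIZE, offset, size))
        address += size
        length -= size
    return chunks


# A 16-byte write starting 8 bytes before a page boundary touches two pages.
split_by_page(0x1FF8, 16)  # [(1, 4088, 8), (2, 0, 8)]
```

Consecutive guest virtual pages need not be backed by adjacent physical frames, so each piece may require its own translation and mapping. A buffer-based write hides that loop, whereas a single-page mapping leaves the splitting to the caller.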

We hope that the community will find it useful, and welcome discussion! It would be especially great if as much new functionality as possible makes its way into LibVMI.

Interested in learning more? Our Chief Linux Officer Mihai Dontu will be presenting “Zero-Footprint Memory Introspection with Xen” at LinuxCon North America on Wednesday, Aug. 19. Mihai is currently involved in further integrating Bitdefender hypervisor-based memory introspection technology with Xen. There are also two talks about Virtual Machine Introspection at the Xen Project Developer Summit: see Virtual Machine Introspection with Xen and Virtual Machine Introspection: Practical Applications.